I had an opportunity to speak at the very first BMC India Technical Event, held in Bengaluru on June 11th, 2009. At this event I talked about the changing landscape of data centers. This article is an excerpt of the talk, intended to help readers follow the presentation. The entire presentation is available here.

Many factors are causing the data center landscape to change, and two disruptive technologies are at play: virtualization and cloud computing. Virtualization has been around for a while, but only recently has it begun to make a significant impact on data centers. It has come a long way since VMware first introduced VMware Workstation in the 1990s. That product was initially designed to ease software development and testing by partitioning a workstation into multiple virtual machines.

The virtual machine software market has evolved substantially, from the Xen® hypervisor, the powerful open source industry standard for virtualization, to VMware vSphere, the first cloud operating system, which transforms IT infrastructure into a private cloud: a collection of internal clouds federated on demand to external clouds. Hardware vendors are not far behind. Intel, AMD and other hardware vendors are investing heavily in R&D to optimize their chipsets and hardware for the hypervisor layer.

According to IDC, more than 75% of companies with more than 500 employees are deploying virtual servers. According to a Goldman Sachs survey of Fortune 1000 companies, 34 percent of servers will be virtualized within the next 12 months, more than double the current level of 15 percent.

Cloud computing, similarly, has existed as a concept for many years. However, several factors are finally coming together that make it ripe for major impact. Bandwidth has increased significantly across the world, enabling faster access to applications in the cloud. Thanks to the success of SaaS companies, organizations are becoming more comfortable with keeping sensitive data outside their direct physical control.

There is an increasing need to support a remote workforce, so applications that used to reside on individual machines now need to be centralized.

The economy is pushing costs down. Last but not least, there is increasing awareness about going green.

All these factors are causing the data center landscape to change. Now let’s look at some of the ways data centers are changing.

Data centers today are becoming much more agile: quick, light, easy to move and nimble. One reason is that in today’s data center, a virtual machine can be added far more quickly than a physical server can be procured and provisioned.

Self-service provisioning lets end users quickly and securely reserve resources, and it automates the configuration and provisioning of those physical and virtual servers without administrator intervention. Building a self-service application and pooling resources to share across teams not only optimizes utilization and reduces needless hardware spending, but also improves time to market and increases IT productivity by eliminating mundane, time-consuming tasks.

Public clouds have set new benchmarks. For example, the Amazon EC2 availability SLA of 99.95% raised the bar significantly over traditional data center availability SLAs. Most recently, another vendor, 3Tera, came out with five nines (99.999%) availability. To compare Amazon and 3Tera: 99.999% availability translates into about 5.3 minutes of downtime per year, while 99.95% allows about 263 minutes (roughly 4.4 hours). Even the difference in cost between five nines and four nines (99.99%, or 52.6 minutes of downtime per year) can be substantial.
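The downtime figures above follow from simple arithmetic: a 365-day year has 525,600 minutes, and the maximum downtime an SLA permits is that total multiplied by (1 − availability). A minimal Python sketch that reproduces them; the function name is illustrative only, and leap years are ignored.

```python
# Minutes in a 365-day year (leap years ignored for simplicity).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def annual_downtime_minutes(availability_pct: float) -> float:
    """Maximum yearly downtime allowed by an availability percentage."""
    return (1 - availability_pct / 100) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for label, pct in [("Amazon EC2 SLA", 99.95), ("four nines", 99.99), ("five nines", 99.999)]:
        print(f"{label:15} {pct:>7}% -> {annual_downtime_minutes(pct):7.1f} min/year")
```

This gives about 262.8 minutes for 99.95%, 52.6 for 99.99% and 5.3 for 99.999%. Keep in mind that an SLA percentage is only a ceiling on downtime; how providers measure it (the measurement window, excluded events) varies.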

Data centers are also becoming more scalable. With virtualization, a data center might run 1,000 virtual servers for your IT on just 100 physical servers. Again thanks to virtualization, data centers are far less constrained by physical space and power/cooling requirements.

The scalability requirements for data centers are also changing. Applications are becoming hungrier for computation and storage. As an example of how compute-intensive applications have become, returning a sub-half-second response to an ordinary Google search query involves 700 to 1,000 servers! Google has more than 200,000 servers, and I’d guess the real number is far beyond that and growing every day.

Another example is Facebook, where more than 200 million photos are uploaded every week. Or Amazon, whose data center utilization after the holiday season used to drop below 10%! Google Search, Facebook and Amazon are not one-off examples. More and more applications will be built with similar architectures, and the data centers that host and support them will need to evolve accordingly.

Data centers are becoming more fungible, meaning that the resources used within them are becoming easily replaceable. Earlier, when you procured a server, chances were high that it would stay in service for a number of years. Now virtual servers get created, removed, reserved and parked in your data center!

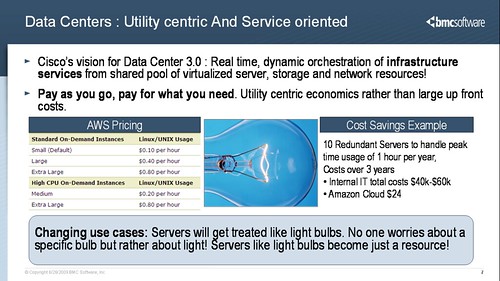

Data centers are becoming more utility-centric and service-oriented. For example, look at Cisco’s definition of Data Center 3.0, which describes the data center as infrastructure services. Data center users will increasingly demand pay-as-you-go, pay-for-what-you-use pricing. For various reasons, users will cut back on large upfront capital expenses and instead prefer smaller, recurring operating expenses.

Most organizations have seasonal peaks, daily peaks, or both. Their cost differential is less extreme than in the Amazon example above, but it is still large enough to have a real impact on the bottom line. In addition, the ability to pay only for what you use makes it easy to run “proofs of concept” and other R&D that requires dedicated hardware.

- As the discrepancy between peak usage and standard usage grows, the cost difference between the cloud and other options becomes overwhelming.
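The peak-versus-average argument can be made concrete with a toy cost model. Owned capacity has to be sized for the peak hour and is paid for around the clock, while pay-per-use capacity is billed only for the server-hours actually consumed. Both rates below are hypothetical placeholders, not real vendor pricing, and the demand profile (a 10-server baseline with a 4-hour daily peak) is invented for illustration.

```python
# Hypothetical rates, for illustration only (not real vendor pricing).
OWNED_RATE = 0.10  # amortized cost per owned server-hour, paid whether busy or idle
CLOUD_RATE = 0.25  # pay-per-use price per cloud server-hour


def owned_cost(demand: list[int]) -> float:
    """Owned capacity is sized for the peak hour and paid for every hour."""
    return max(demand) * len(demand) * OWNED_RATE


def cloud_cost(demand: list[int]) -> float:
    """Pay-as-you-go: pay only for the server-hours actually used."""
    return sum(demand) * CLOUD_RATE


if __name__ == "__main__":
    # One day: a steady baseline of 10 servers plus a 4-hour peak of varying height.
    for peak in (10, 20, 50, 100):
        demand = [10] * 20 + [peak] * 4
        print(f"peak {peak:3d}: owned ${owned_cost(demand):7.2f}  cloud ${cloud_cost(demand):7.2f}")
```

With these made-up rates, owned hardware is cheaper when demand is flat ($24 vs $60 per day at a 10-server peak), but at a 50-server peak pay-per-use already wins ($100 vs $120), and the gap keeps widening as the peak grows ($150 vs $240 at 100). A real comparison would also have to account for staffing, data transfer and migration costs.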

Technology is changing, business needs are changing, and with the times, organizations’ social responsibilities are changing too. More and more companies are thinking about their impact on the environment. Data centers become a major source of environmental impact, especially as they grow in size.

A major contributor to excessive power consumption in the data center is over-provisioning. Organizations have created dedicated, siloed environments for individual application loads, resulting in extremely low utilization rates. As a result, data centers spend a lot of money powering and cooling many machines that individually aren’t doing much useful work.

Cost is not the only problem. Energy consumption has become a severe constraint on growth. In London, for example, there is now a moratorium on building new data centers because the city does not have the electrical capacity to support them!

Powering one server contributes, on average, about 6 tons of carbon emissions (depending on the server’s location and how power is generated in that region). It is not far-fetched to claim that every data center has some servers that are kept running only because no one knows which business services depend on them, even though in reality no one seems to be using them. Even among the servers that are in use, there is an opportunity to increase utilization and consolidate them.
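The consolidation opportunity described in this section can be illustrated with first-fit decreasing, a simple and common bin-packing heuristic; it is not necessarily what any particular virtualization product uses. Each workload is expressed as a hypothetical fraction of one host’s CPU, and no host is filled beyond an assumed 80% cap to leave headroom.

```python
def consolidate(loads: list[float], capacity: float = 0.8) -> list[float]:
    """First-fit decreasing: place each workload (a fraction of one host's CPU)
    on the first host with room, never filling a host above `capacity`.
    Returns the total load placed on each host that ends up powered on."""
    hosts: list[float] = []
    for load in sorted(loads, reverse=True):
        for i, used in enumerate(hosts):
            if used + load <= capacity:
                hosts[i] = used + load
                break
        else:
            hosts.append(load)  # no existing host has room; power on another
    return hosts


if __name__ == "__main__":
    # Hypothetical: ten dedicated, siloed servers each averaging 5-20% CPU.
    silo_loads = [0.05, 0.10, 0.15, 0.20, 0.08, 0.12, 0.06, 0.18, 0.10, 0.07]
    hosts = consolidate(silo_loads)
    print(f"{len(silo_loads)} servers -> {len(hosts)} hosts: {[round(h, 2) for h in hosts]}")
```

With these made-up loads (about 1.1 hosts’ worth of CPU in total), the ten servers fit on two hosts, so eight could be powered off. A real consolidation plan would also have to consider memory, I/O, peak rather than average load, and failover headroom.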

Now that we have seen some of the ways data centers are changing, I am going to shift gears and talk about how data centers have evolved, using the evolution of the web as an analogy. The web evolved from Web 1.0, where everyone could access content, to Web 2.0, where people started contributing, to Web 3.0, where the mantra is that everyone can innovate.

Applying this analogy to data centers, we can see how they have evolved from their early days to where we are today:

- In the beginning, data centers were nothing but generic machines housed together. From there they evolved to blade servers, which removed some duplicated components and optimized the design. Now, in Data Center 3.0, they are becoming even more virtual and cloud-based.

- We have moved from mostly physical servers, to a mix of physical and virtual servers, to the point where even the underlying resources are treated as virtual.

- Provisioning time has gone down significantly.

- User participation has changed: users can now reserve and provision resources themselves through self-service.

- Management tools that used to be nice-to-have now play a much more important role and are becoming mandatory. A good example is Cisco UCS (Unified Computing System), on which the BladeLogic management tool will be pre-installed!

- The role of a data center admin itself has changed from mostly menial work into a much more sophisticated one!

Slideshow for “Changing Landscape of Data Centers”



With data centres going the ‘on-demand’ way via clouds, how far are we from ‘on-demand’ organizations, with a limited captive workforce and a large chunk of mobile professionals (from the open source world and otherwise) providing their services on demand? 5 yrs? 10 yrs? A virtual workforce?

@Shalini,

That is an interesting question. I think the push towards a virtual workforce will be guided by the changes happening in the way we perceive and run businesses.

I would like to use the analogy of the electrical grid. We take it for granted that electricity will be available in our house, on demand, and we don’t have to invest anything to get it there. However, if an infrastructure problem arises in our own home, we look up the electrician’s number posted on our refrigerator and call him or her on an as-needed basis.

If this analogy is a bit far-fetched, here is an IT-related example. Recently, at my prior company, we switched from Microsoft Exchange to SaaS Gmail for our email needs. This let us use Gmail support services on demand, only when needed.

As more and more business services go this way, slowly but surely we will start seeing a virtual workforce come into the picture.

Thanks,

-Suhas

Hi Suhas,

What form does the “dynamic and transparent provisioning” of resources in the DC 3.0 vision take?

Will this transparency take the form of migrating VMs to appropriate hosts based on a performance-metrics feedback loop and the VMs’ base requirements?

Thanks,

Abhijit

@Abhijit,

Yes, your VM example is just one scenario of dynamic and transparent provisioning. There will be others, such as the following:

– Cisco UCS can dynamically configure a blade with the appropriate amount of memory, network and storage resources to satisfy application needs

Or,

– A public cloud vendor (e.g. Amazon AWS) can elastically provide more computing resources as per the increasing demand

-Suhas

Hi Suhas,

The platforms you mention, like EC2 and Cisco UCS, can scale resources up or down as desired, but where should the trigger to do so originate? Is it the application’s responsibility to monitor itself and trigger the platform when it deems necessary? Or are there tools, products or monitors out there that do this across applications? That may be difficult too, as an application is probably the best judge of its own trigger points.

Regards

Abhijit

Hi Abhijit,

My first reaction is that the infrastructure should adapt to the changing needs of the application, and that the application should not need to be aware of infrastructure details. This is where SMART infrastructure management tools come into the picture (get my hint ;))

Having said this, tomorrow’s applications will also need to be designed so that they can take advantage of virtually unlimited computing power via techniques like MapReduce.

So, in lawyer’s terms, the answer to your question is “it depends!” 🙂

-Suhas

What’s your take on where Indian data centers (those operated by telcos, ISPs, independent companies, etc.) fall in this?

Are they evolving fast enough and providing the latest cloud offerings to their customers, or are they behind the times?

@DJ,

Based on my observations of IT organizations in India, I think they are right up there with the rest of the world in adopting new technologies. Some of this is driven by rapid expansion needs. For example, I recently talked to the IT head of one of the largest mobile operators in India, and he was complaining (?) that their customer base had tripled in the last year (many millions of subscribers) and that he was having a hard time scaling their IT infrastructure to meet this increasing demand. This is a perfect example of why companies are going to adopt newer technologies at an accelerated rate!

-Suhas

Dear Suhas,

Nice article. Indeed, the IT industry is slowly transitioning to a ‘pay as you go’ kind of mentality. However, to add to this, we need to identify the right set of scenarios for cloud computing, which in my humble opinion are still missing.

I also feel that everyone is trying to jump on the cloud and Web 3.0 bandwagon, but without the right set of scenarios, requirements and architecture.

Lastly, I am not quite convinced by the pairing of Web 3.0 and PaaS, as the two are very different from one another; to me, Web 3.0 is more about adding artificial intelligence to the web.