In Gartner’s recent Hype Cycle for Cloud Computing 2009 special report, the technologies at the ‘Peak of Inflated Expectations’ include Cloud Computing itself! (For a description of the five phases of the Hype Cycle, look here.) This means that Cloud Computing is on the verge of entering the “Trough of Disillusionment” phase. Many technologies never come out of this dreaded trough: they fail to meet expectations and quickly become unfashionable. Articles such as “Could the cloud lead to an even bigger 9/11” clearly indicate that Gartner’s analysis is right and that cloud computing has indeed reached the peak of hype!

This article has my musings on why cloud computing will eventually come out of this phase and reshape the way we run our businesses.

I had an opportunity to attend the VMworld 2009 conference. During the conference, VMware announced its latest initiative, vCloud. vCloud essentially uses VMware’s virtualization technology to create an ecosystem of cloud service providers. With this initiative VMware joins the already crowded space of public cloud providers such as Amazon, Rackspace Cloud and Savvis. Almost every exhibitor at VMworld was trying to get on the Cloud Computing bandwagon. And this was not even a Cloud Computing focused conference! The more you look into Cloud Computing, the more you feel that it is indeed the next big thing after the internet gold rush of the 90s.

All this hype around Cloud Computing feels like déjà vu. Turn the dial back a few years and Software As A Service (SaaS) was going through a very similar transition. When SaaS reached the trough of disillusionment, skeptics raised doubts. Many argued that they would never consider putting their competitive data (CRM) in a software system outside their corporate networks. Salesforce had to fight an uphill battle as it tried to establish its SaaS products. However, the value proposition of SaaS, in terms of zero install and pay-as-you-go, was too attractive to ignore. Today SaaS is the architecture of choice for many enterprise software products, and the last time I checked Salesforce was sitting pretty at a massive market cap of 7.13 billion dollars!

Let’s look at the benefits of Cloud Computing:

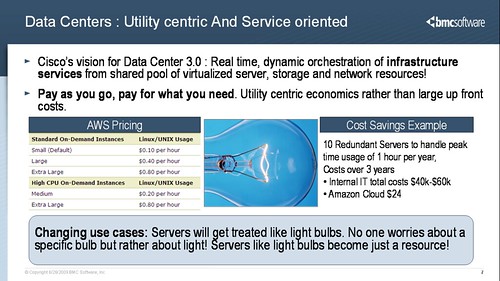

- Lower Costs – OPEX not CAPEX: Cloud Computing avoids capital expenditure (CapEx) on hardware, software and services by renting them from a third party provider (such as Amazon). Consumption is usually billed on a utility basis (resource based, like electricity) or a subscription basis (time based, like a monthly cable subscription), with little or no upfront cost. You pay as you go and pay only for what you need. This seemingly straightforward benefit has a deep impact on business models and strategy.

- Self service and Agility: Provisioning a server used to take days if not weeks. With Amazon you can procure a server on their public cloud in minutes! Users can generally terminate the contract at any time (improving ROI and eliminating financial risks), and the services are often covered by service level agreements (SLAs) with financial penalties.

- Focus on your business: Cloud computing abstracts away the underlying resources (server, network and storage) and their management so that you can focus on your core business. It is a win-win for Providers and Consumers.

- Elastic Scalability: Hosting your applications on Cloud Infrastructure enables dynamic (“on-demand”) provisioning of resources in near real time, without wasting server resources engineered for peak loads. This lets small businesses start offering their services on the web with low entry barriers and then scale up as their load grows.
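To make the utility versus subscription billing distinction from the first bullet concrete, here is a minimal Python sketch. The prices and function names are hypothetical, picked only for illustration; they are not any provider's actual rates.

```python
def utility_cost(hours_used: float, rate_per_hour: float) -> float:
    """Utility billing: pay only for the resource hours actually consumed."""
    return hours_used * rate_per_hour


def subscription_cost(months: int, fee_per_month: float) -> float:
    """Subscription billing: pay a flat fee per period, regardless of usage."""
    return months * fee_per_month


def break_even_hours(fee_per_month: float, rate_per_hour: float) -> float:
    """Monthly usage at which both billing models cost the same."""
    return fee_per_month / rate_per_hour


# Hypothetical prices: 0.5 per server-hour, or a flat 50 per month.
light_month = utility_cost(hours_used=40, rate_per_hour=0.5)
flat_month = subscription_cost(months=1, fee_per_month=50.0)
threshold = break_even_hours(fee_per_month=50.0, rate_per_hour=0.5)

print(f"utility bill for a light month: {light_month}")
print(f"subscription bill for one month: {flat_month}")
print(f"break-even usage in hours per month: {threshold}")
```

Below the break-even usage the utility model is cheaper, and above it the flat subscription wins. Either way there is no upfront hardware purchase.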

Cloud Infrastructure and services are multi-tenant by default, with multiple customers sharing resources and the associated costs. Providers run centralized infrastructure at low-cost locations, and consumers benefit from the providers’ expertise in infrastructure utilization and efficiency. Providers gain efficiency through economies of scale and are able to offer the same service at a lower cost to happy consumers.

Consider, for example, that you want to start a small web based business selling toys. Your business plan calls for exponential growth, with the number of customers ramping up from a few hundred in the first year to thousands in 2-3 years to a million plus in 5-7 years. Of course, this plan does not even include the wild fluctuations during peak holiday seasons. Until today, planning for this type of scenario involved a lot of upfront cost, which created huge barriers to entry for start-ups. Now, with cloud computing and public cloud infrastructure, such small companies can dream of doing exactly what they want to do, with virtually unlimited elasticity!
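A rough back-of-the-envelope sketch of the toy store scenario, in Python. The monthly demand figures and the price per server-month are made-up assumptions, and for simplicity an owned server is assumed to cost the same per month as a rented one. The point is only to compare paying for peak capacity all year against paying for what each month actually needs.

```python
# Hypothetical servers needed in each month of one year,
# with a holiday spike in the last two months.
monthly_demand = [1, 1, 1, 2, 2, 2, 3, 3, 4, 4, 6, 12]

# Assumed price of one server for one month (same for both models).
PRICE_PER_SERVER_MONTH = 100


def fixed_capacity_cost(demand, price):
    """Provision for the peak month and keep that capacity all year."""
    return max(demand) * len(demand) * price


def elastic_cost(demand, price):
    """Pay each month only for the servers that month actually needs."""
    return sum(demand) * price


def fixed_utilization(demand):
    """Fraction of the peak-sized capacity that is actually used."""
    return sum(demand) / (max(demand) * len(demand))


fixed = fixed_capacity_cost(monthly_demand, PRICE_PER_SERVER_MONTH)
elastic = elastic_cost(monthly_demand, PRICE_PER_SERVER_MONTH)

print(f"peak-provisioned cost: {fixed}")
print(f"elastic cost:          {elastic}")
print(f"utilization of peak-sized capacity: {fixed_utilization(monthly_demand):.0%}")
```

With these made-up numbers, the peak-sized setup sits mostly idle for ten months of the year, which is exactly the waste that on-demand provisioning avoids.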

As with the SaaS success story, it is the underlying benefits of the “cloud” that will eventually win over the skeptics. Of course, another important factor will be whether an ecosystem evolves in a timely fashion. One of the reasons SaaS was successful was that an entire ecosystem became available that lent itself well to the SaaS model, including Web Standards (SOAP, WSDL, UDDI) and architectures such as AJAX.

Similar to the platform wars of the eighties (followed by the browser wars of the nineties), Cloud Computing is currently going through a war of its own, with each player trying to establish itself as the destination. Some efforts have started to promote interoperability and openness of the cloud. The Open Cloud Initiative is one such example. However, it remains to be seen how the industry as a whole matures and adopts such efforts…

Cloud computing is here to stay and will eventually succeed as a concept. It has the power to establish new business models and change existing processes. More will have to be written about what it means for the enterprises of tomorrow to manage their businesses in the cloud. Do provide feedback via your comments if you would like to hear more about it…

See also: Suhas’ previous PuneTech article: The Changing Landscape of Data Centers.


![[Image: Basic cloud computing architectures config #1 to #3]](http://farm4.static.flickr.com/3191/2706605751_1da653b46a_o.png)

![[Image: Cloud computing HA configuraion #4]](http://farm4.static.flickr.com/3221/2706606111_48a075ce8f_o.png)

![[Image: Cloud computing Hot Standby Config #5]](http://farm4.static.flickr.com/3164/2707423692_f80478a7cc_o.png)

![[Image: Cloud computing multi-tier config #6]](http://farm4.static.flickr.com/3015/2707423842_ee81cf527e_o.png)

![[Image: Multi-tier cloud computing with HA]](http://farm4.static.flickr.com/3048/2706605363_0ae1236762_o.png)