(PuneTech is honored to have Dr. Narayan Venkatasubramanyan, an Optimization Guru and one of the original pioneers in applying Optimization to Supply Chain Management, as our contributor. I had the privilege of working closely with Narayan at i2 Technologies in Dallas for nearly 10 years.

PuneTech has published some introductory articles on Supply Chain Management (SCM) and the optimization & decision support challenges involved in various real world SCM problems. Who better to write about this area in further depth than Narayan!

For Dr. Narayan Venkatasubramanyan’s detailed bio, please click here.

This is the first in a series of articles that we will publish once a week for a month. For the full series of articles, click here.)

the following entry was prompted by a request for an article on the topic of “optimization” for publication in punetech.com, a website co-founded by amit paranjape, a friend and former colleague. for reasons that may have something to do with the fact that i’ve made a living for a couple of decades as a practitioner of that dark art known as optimization, he felt that i was best qualified to write about the subject for an audience that was technically savvy but not necessarily aware of the application of optimization. it took me a while to overcome my initial reluctance: is there really an audience for this after all, even my daughter feigns disgust every time i bring up the topic of what i do. after some thought, i accepted the challenge as long as i could take a slightly unusual approach to a “technical” topic: i decided to personalize it by rooting in a personal-professional experience. i could then branch off into a variety of different aspects of that experience, some technical, some not so much. read on …

background

the year was 1985. i was fresh out of school, entering the “real” world for the first time. with a bachelors in engineering from IIT-Bombay and a graduate degree in business from IIM-Ahmedabad, and little else, i was primed for success. or disaster. and i was too naive to tell the difference.

for those too young to remember those days, 1985 was early in rajiv gandhi‘s term as prime minister of india. he had come in with an obama-esque message of change. and change meant modernization (he was the first indian politician with a computer terminal situated quite prominently in his office). for a brief while, we believed that india had turned the corner, that the public sector companies in india would reclaim the “commanding heights” of the economy and exercise their power to make india a better place.

CMC was a public sector company that had inherited much of the computer maintenance business in india after IBM was tossed out in 1977. quickly, they broadened well beyond computer maintenance into all things related to computers. that year, they recruited heavily in IIM-A. i was one of an unusually large number of graduates who saw CMC as a good bet.

not too long into my tenure at at CMC, i was invited to meet with an mid-level manager in electronics & telecommunications department of the oil and natural gas commission of india (ONGC). the challenge he posed us was simple: save money by optimizing the utilization of helicopters in the bombay high oilfield.

the problem

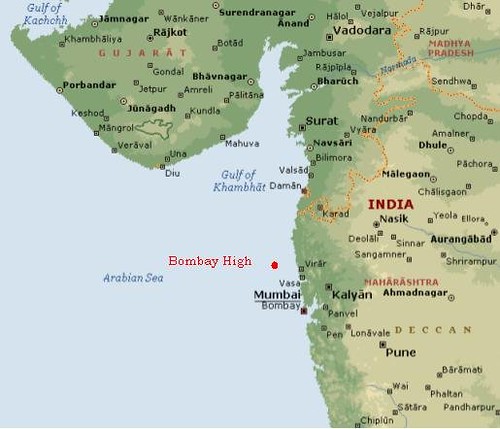

the bombay high oilfield is about 100 miles off the coast of bombay (see map). back then, it was a collection of about 50 oil platforms, divided roughly into two groups, bombay high north and bombay high south.

(on a completely unrelated tangent: while writing this piece, i wandered off into searching for pictures of bombay high. i stumbled upon the work of captain nandu chitnis, ex-navy now ONGC, biker, amateur photographer … who i suspect is a pune native. click here for a few of his pictures that capture the outlandish beauty of an offshore oil field.)

movement of personnel between platforms in each of these groups was managed by a radio operator who was centrally located.

all but three of these platforms were unmanned. this meant that the people who worked on these platforms had to be flown out from the manned platforms every morning and brought back to their base platforms at the end of the day.

at dawn every morning, two helicopters, flew out from the airbase in juhu, in northwestern bombay. meanwhile, the radio operator in each field would get a set of requirements of the form “move m men from platform x to platform y”. these requirements could be qualified by time windows (e.g., need to reach y by 9am, or not available for pick-up until 8:30am) or priority (e.g., as soon as possible). each chopper would arrive at one of the central platforms and gets its instructions for the morning sortie from the radio operator. after doing its rounds for the morning, it would return to the main platform. at lunchtime, it would fly lunchboxes to the crews working at unmanned platforms. for the final sortie of the day, the radio operator would send instructions that would ensure that all the crews are returned safely to their home platforms before the chopper was released to return to bombay for the night.

the challenge for us was to build a computer system that would optimize the use of the helicopter. the requirements were ad hoc, i.e., there was no daily pattern to the movement of men within the field, so the problem was different every day. it was believed that the routes charted by the radio operator were inefficient. given the amount of fuel used in these operations, an improvement of 5% over what they did was sufficient to result in a payback period of 4-6 months for our project.

- Image by Captain Nandu Chitnis, Master Mariner via Flickr

this was my first exposure to the real world of optimization. a colleague of mine — another IIM-A graduate and i — threw ourselves at this problem. later, we were joined yet another guy, an immensely bright guy who could make the lowly IBM PC-XT — remember, this was the state-of-the-art at that time — do unimaginable things. i couldn’t have asked to be a member of a team that was better suited to this job.

the solution

we collected all the static data that we thought we would need. we got the latitude and longitude of the on-shore base and of each platform (degrees, minutes, and seconds) and computed the distance between every pair of points on our map (i think we even briefly flirted with the idea of correcting for the curvature of the earth but decided against it, perhaps one of the few wise moves we made). we got the capacity (number of seats) and cruising speed of each of the helicopters.

we collected a lot of sample data of actual requirements and the routes that were flown.

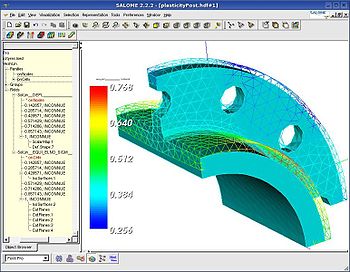

we debated the mathematical formulation of the problem at length. we quickly realized that this was far harder than the classical “traveling salesman problem”. in that problem, you are given a set of points on a map and asked to find the shortest tour that starts at any city and touches every other city exactly once before returning to the starting point. in our problem, the “salesman” would pick and/or drop off passengers at each stop. the number he could pick up was constrained, so this meant that he could be forced to visit a city more than once. the TSP is known to be a “hard” problem, i.e., the time it takes to solve it grows very rapidly as you increase the number of cities in the problem. nevertheless, we forged ahead. i’m not sure if we actually completed the formulation of an integer programming problem but, even before we did, we came to the conclusion that this was too hard of a problem to be solved as an integer program on a first-generation desktop computer.

instead, we designed and implemented a search algorithm that would apply some rules to quickly generate good routes and then proceed to search for better routes. we no longer had a guarantee of optimality but we figured we were smart enough to direct our search well and make it quick. we tested our algorithm against the test cases we’d selected and discovered that we were beating the radio operators quite handily.

then came the moment we’d been waiting for: we finally met the radio operators.

they looked at the routes our program was generating. and then came the first complaint. “your routes are not accounting for refueling!”, they said. no one had told us that the sorties were long enough that you could run out of fuel halfway, so we had not been monitoring that at all!

- ONGC’s HAL Dhruv Helicopters on sorties off the Mumbai coast. Image by Premshree Pillai via Flickr

so we went back to the drawing board. we now added a new dimension to the search algorithm: it had to keep track of fuel and, if it was running low on fuel during the sortie, direct the chopper to one of the few fuel bases. this meant that some of the routes that we had generated in the first attempt were no longer feasible. we weren’t beating the radio operators quite as easily as before.

we went back to the users. they took another look at our routes. and then came their next complaint: “you’ve got more than 7 people on board after refueling!”, they said. “but it’s a 12-seater!”, we argued. it turns out they had a point: these choppers had a large fuel tank, so once they topped up the tank — as they always do when they stop to refuel — they were too heavy to take a full complement of passengers. this meant that the capacity of the chopper was two-dimensional: seats and weight. on a full tank, weight was the binding constraint. as the fuel burned off, the weight constraint eased; beyond a certain point, the number of seats became the binding constraint.

we trooped back to the drawing board. “we can do this!”, we said to ourselves. and we did. remember, we were young and smart. and too stupid to see where all this was going.

in our next iteration, the computer-generated routes were coming closer and closer to the user-generated ones. mind you, we were still beating them on an average but our payback period was slowly growing.

we went back to the users with our latest and greatest solution. they looked at it. and they asked: “which way is the wind blowing?” by then, we knew not to ask “why do you care?” it turns out that helicopters always land and take-off into the wind. for instance, if the chopper was flying from x to y and the wind was blowing from y to x, the setting was perfect. the chopper would take off from x in the direction of y and make a bee-line for y. on the other hand, if the wind was also blowing from x to y, it would take off in a direction away from y, do a 180-degree turn, fly toward and past y, do yet another 180-degree turn, and land. given that, it made sense to keep the chopper generally flying a long string of short hops into the wind. when it could go no further because they fuel was running low or it needed to go no further in that direction because there were no passengers on board headed that way, then and only then, did it make sense to turn around and make a long hop back.

“bloody asymmetric distance matrix!”, we mumbled to ourselves. by then, we were beaten and bloodied but unbowed. we were determined to optimize these chopper routes, come hell or high water!

so back we went to our desks. we modified the search algorithm yet another time. by now, the code had grown so long that our program broke the limits of the editor in turbo pascal. but we soldiered on. finally, we had all of our users’ requirements coded into the algorithm.

or so we thought. we weren’t in the least bit surprised when, after looking at our latest output, they asked “was this in summer?”. we had now grown accustomed to this. they explained to us that the maximum payload of a chopper is a function of ambient temperature. on the hottest days of summer, choppers have to fly light. on a full tank, a 12-seater may now only accommodate 6 passengers. we were ready to give up. but not yet. back we went to our drawing board. and we went to the field one last time.

in some cases, we found that the radio operators were doing better than the computer. in some cases, we beat them. i can’t say no creative accounting was involved but we did manage to eke out a few percentage point of improvement over the manually generated routes.

epilogue

you’d think we’d won this battle of attrition. we’d shown that we could accommodate all of their requirements. we’d proved that we could do better than the radio operators. we’d taken our machine to the radio operators cabin on the platform and installed it there.

we didn’t realize that the final chapter hadn’t been written. a few weeks after we’d declared success, i got a call from ONGC. apparently, the system wasn’t working. no details were provided.

i flew out to the platform. i sat with the radio operator as he grudgingly input the requirements into the computer. he read off the output from the screen and proceeded with this job. after the morning sortie was done, i retired to the lounge, glad that my work was done.

a little before lunchtime, i got a call from the radio operator. “the system isn’t working!”, he said. i went back to his cabin. and discovered that he was right. it is not that our code had crashed. the system wouldn’t boot. when you turned on the machine, all you got was a lone blinking cursor on the top left corner of the screen. apparently, there was some kind of catastrophic hardware failure. in a moment of uncommon inspiration, i decided to open the box. i fiddled around with the cards and connectors, closed the box, and fired it up again. and it worked!

it turned out that the radio operator’s cabin was sitting right atop the industrial-strength laundry room of the platform. every time they turned on the laundry, everything in the radio room would vibrate. there was a pretty good chance that our PC would regress to a comatose state every time they did the laundry. i then realized that this was a hopeless situation. can i really blame a user for rejecting a system that was prone to frequent and total failures?

other articles in this series

this blog entry is intended to set the stage for a series of short explorations related to the application of optimization. i’d like to share what i’ve learned over a career spent largely in the business of applying optimization to real-world problems. interestingly, there is a lot more to practical optimization than models and algorithms. each of the the links below leads to a piece that dwells on one particular aspect.

optimization: a case study (this article)

architecture of a decision-support system

optimization and organizational readiness for change

optimization: a technical overview

About the author – Dr. Narayan Venkatasubramanyan

Dr. Narayan Venkatasubramanyan has spent over two decades applying a rare combination of quantitative skills, business knowledge, and the ability to think from first principles to real world business problems. He currently consults in several areas including supply chain and health care management. As a Fellow at i2 Technologies, he tackled supply chains problems in areas as diverse as computer assembly, semiconductor manufacturer, consumer goods, steel, and automotive. Prior to that, he worked with several airlines on their aircraft and crew scheduling problems. He topped off his days at IIT-Bombay and IIM-Ahmedabad with a Ph.D. in Operations Research from the University of Wisconsin-Madison.

He is presently based in Dallas, USA and travels extensively all over the world during the course of his consulting assignments. You can also find Narayan on Linkedin at: http://www.linkedin.com/in/narayan3rdeye

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=4b7c32a0-1264-42f1-b98c-8f7fadffb135)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=b7ed0643-f578-4076-8dd2-03de3e329a10)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=06f84c1f-59ae-470f-80a9-775c23973a03)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=b6e5f10d-f852-4b07-b84b-27f04d69de1c)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=6ab2514b-6008-4b68-9e94-b55811ca6159)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=500333de-e95f-4c59-b0ac-fd2d0abd18ba)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=1f52eb9a-952b-4003-a9db-914a70fad31a)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=36a76173-9f53-4f2e-9dc1-53de4f7866bf)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=031cdc05-fa64-44f6-b50b-20202cea10eb)

![Reblog this post [with Zemanta]](http://img.zemanta.com/reblog_b.png?x-id=e1e0ee10-1c89-43cf-8c54-1708e72768be)